Not long ago I wrote about GenAI and kids, and what we do in my own family to make these tools useful while avoiding the pitfalls. Even a short time later, that piece feels weak. As I’ve looked into this more, the numbers and trends have proven disturbing. More kids are being harmed, and some are dying. It’s not just the usual “if it bleeds it leads” news cycle impinging on my personal awareness; more incidents are actually happening. And what we’re facing as parents is getting worse fast, across multiple venues.

Any of us building digital products (parents or not) should have some awareness of the widening and deepening digital threats kids face, and should do what we can about them, though this writeup is aimed more at parents. Yes, we all face digital and online risks, but kids are far more vulnerable. I’m writing this because I’ve been having more conversations about it lately with other parents, especially some who don’t work in digital.

Part of my motivation for writing this is my own recent exposure to a number of unfortunate events. Some of it is just media that found its way to me (probably through targeting based on my recent writing and searches), but some were local events in my town that were shocking, even if, sadly, not entirely surprising.

Today’s parenting challenges are unlike any we’ve faced before. Parents have always had to navigate rough terrain, from ordinary growing pains to drugs, sex, and all the other usual things. When consumer online services came along (as a practical matter, around the late 1980s and early 1990s), things changed, though mostly in clear ways. One advantage parents had at that time was that access could be limited more easily. Typically it was a switch: you were either online or not. Now? Digital is ambient, and so are communication channels. Those channels are embedded in all kinds of places, including some we might not expect, such as deep within gameplay.

It’s a problem. A real and growing problem. I need to get to the main points, but first I feel compelled to say what disturbs me most about all this. Anyone can understand that every so often there’s some evil or disturbed person out there. But the prevalence of all this? How common it is? That is especially disturbing. Regardless, this is what we have to deal with. What follows is a long list, and it’s still not as extensive as it could be. But I’ve tried to hit what I see as the major threat categories and offer practical suggestions for at least trying to mitigate them.

As we go through them, it’s worth stating the obvious at the outset: strict control is likely an illusion. Not only is it probably impossible, but building awareness, confidence, and skills for navigating the world will be more effective overall. It’s a balance. Of course, age and maturity matter. For younger kids especially, control where possible can be a valid starting place.

AI

AI is not just another content source. It is interactive, persuasive, and emotionally responsive, or at least it sometimes seems so. Just to level set: when I say “AI” in this context, I mean interactive generative AI models. Yes, there are many other types of machine learning and AI. But at this point, when a typical person mentions AI, they mean a large language model that chats.

One of the most dangerous misconceptions is that AI is “just software.” Many people are likely familiar with the idea of “AI hallucination.” Large language models frequently hallucinate, and according to Live Science, it’s getting worse. Others say things are getting better. The reality, in my opinion, is that this will keep vacillating as new versions come out. Also, more “agentic” tools (which integrate information from multiple sources) will create new challenges here. These tools can produce fluent, confident answers that are factually wrong, yet users consistently over-trust them because of how authoritative they sound. And that goes for adults too! Still, children are especially vulnerable because they lack the experience to question confidence itself.

Beyond factual errors, AI introduces emotional risk. As I wrote in AI GPT Safety & Issues for Kids, conversational systems can mirror a child’s emotional state without understanding whether that state is dangerous. If a child expresses despair or distress, an AI may validate those feelings without redirecting or escalating, which a responsible adult would instinctively do. On the other hand, some developers are working on systems that read “affective” signals, such as voice tone or expression, to assess emotional state. But how accurate will this be, and how will it be used? We don’t know yet. It will be just “another thing” to watch.

Meanwhile, from MissingKids.org, “Already this year, the National Center for Missing & Exploited Children (NCMEC) has seen sharp increases in new and evolving crimes targeting children on the internet, including online enticement, use of artificial intelligence and child sex trafficking.”

Finally, AI collapses the idea that “seeing is believing.” Voice cloning and image generation allow realistic impersonation, undermining proof itself.

What Parents Can Actually Do

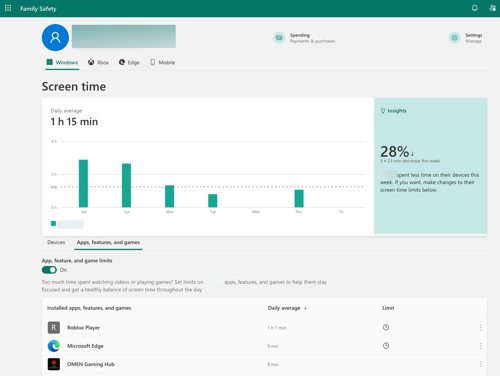

- Use OS-level controls (Apple Screen Time, Google Family Link, Microsoft Family Safety) to restrict AI-enabled apps and search results. Just note that your kids may have multiple devices: if you limit screen time on a computer via Microsoft Family Safety, that does nothing for a separate iPad. Controls vary across services, and you may have to dig for them. For Google, for example, you first need its family controls set up, then you turn on SafeSearch and limit browser use to Chrome. This won’t eliminate AI summaries, but it can reduce them. (See the reference links at the bottom of this article.)

- Audit apps after updates for newly added AI or “assistant” features.

- Discuss ethical AI use explicitly, including peer misuse (See my article: Teach Your Children (AI) Well).

- Reinforce that AI is not a friend, therapist, or authority. Just telling them might not be enough, though; there’s no substitute for checking in periodically.

Suicide, Self-Harm, and Emotional Escalation

This is an uncomfortable topic, but it’s the most critical one.

The U.S. Surgeon General has warned that algorithmic systems can exacerbate youth mental-health risks, particularly through exposure without intent. Kids can easily encounter harmful content because systems surface it, not because they sought it out.

Conversational AI adds risk by engaging privately and persistently at moments of vulnerability. Research in the Journal of Adolescent Health shows that repeated exposure to self-harm content can reinforce ideation in at-risk youth. AI companies say they are working on this, but the industry clearly hasn’t yet figured out how to reliably detect or handle these situations.

Peer dynamics amplify this danger. UNICEF’s 2025 reporting shows children experiencing online bullying exhibit significantly higher levels of anxiety, suicidal thoughts, and self-harm across more than 20 countries. The UN reports that roughly 80% of children surveyed feel at risk of peer violence online. Legislation like the GUARD Act has been introduced in the U.S. Congress to ban minors from using AI chatbots and require age verification, partly because lawmakers believe current systems can manipulate emotions and behavior without adequate guardrails. A ban may not be desirable or practical given the technology’s valuable uses, but the bill is yet another indicator that these threats are clearly recognized.

What Parents Can Actually Do

- Enable platform-level safety features and alerts where they exist, such as Meta’s Family Center. (Again, see the list at the bottom of this article.)

- Watch for behavioral signals such as withdrawal, secrecy, and sudden mood shifts. But be very aware that some parents whose kids experienced serious events have reported seeing no behavior change beforehand.

- Know your kids’ friends. And their parents. Or at least have contact information so parents can easily get in touch with each other if something questionable is going on.

- Normalize adult involvement when emotional distress appears.

- Even tech-savvy adults have to assume we’re not up on the latest tricks kids share to get around our guardrails. What it comes down to is that we have to have the hard conversations. Helicopter parenting can be overly coddling and unhealthy, but some degree of active oversight, even when it feels intrusive, is part of the responsibility of protecting kids in an environment that doesn’t default to their safety.

Cyberbullying and Peer-to-Peer Online Violence

Not all digital harm comes from adults.

Cyberbullying (repeated harassment, humiliation, threats, exclusion, or impersonation) has become constant, persistent, and algorithmically amplified. Unlike in earlier eras, it follows children everywhere and often escalates publicly.

AI, deepfakes (false but realistic imagery and video), and viral trends have made peer cruelty faster, more scalable, and harder to escape. What once stayed within a friend group can now spread school-wide or beyond in hours.

What Parents Can Actually Do

- Teach conflict resolution and digital disengagement explicitly.

- Monitor for indirect signs: reluctance to attend school, sudden friend changes.

- Document and report issues to the appropriate authorities: the school, the police, or the companies involved.

- Reinforce that asking for help is not “snitching”.

- Realize just how fast this can happen. Ask kids for a daily report at dinner. What good happened today? What bad? Did anyone get in trouble for anything? They won’t always tell you, of course, but if you ask, you have a better shot at learning what’s going on.

- Stay engaged with other parents, whether through parent chat groups or parent-teacher organizations. You want to be in the loop if things happen at school. It depends on the school, but you may be surprised at how clued in educators have become in these areas. There are probably policies and response mechanisms in place, possibly involving local law enforcement in some cases. Go to school parent events and get on the email update lists. A lot of kids get smartphones early, and what they do with the camera is among the highest-risk issues. Sometimes it’s not intentional bullying, just something they think is funny. They might not understand how permanent an inappropriate photo can be, or that it may be illegal.

Sextortion and Financial Exploitation

Sextortion is one of the fastest-growing and deadliest threats facing teens.

NCMEC reports a 70% rise in financial sextortion cases in early 2025, primarily targeting teenage boys. Contact sometimes comes through fake profiles on platforms like Discord and Snapchat, but it could happen in any venue. Victims are coerced into sharing explicit images, then blackmailed, often for money, gift cards, or cryptocurrency.

Since 2021, sextortion has been linked to dozens of youth suicides globally. The speed, shame, and financial pressure often prevent kids from asking for help.

What Parents Can Actually Do

- Talk explicitly about fake profiles and rapid intimacy as red flags.

- They may “kind of” know this already, but they need to realize there is zero guarantee of privacy once a picture is snapped or sent. These things can persist. It does not matter at all if your video chat is with someone you know and supposedly trust. This has to get drilled in.

- Unfortunately, it may be appropriate to share tragic stories of what has happened to others.

- The “adult” conversations may have to happen earlier.

- Emphasize: paying never makes it stop.

- Enable two-factor authentication and restrict payment methods on devices.

- Practice immediate reporting to platforms and NCMEC if something does happen.

- Pay attention to interactions with problem kids. Some kids simply lack impulse control or have exceptionally bad judgment. They may be bullies, or they may just think it would be funny to post something inappropriate somewhere. Make sure your kids know to stand up to pressure to do such things, just as with any other peer pressure. (I know. Way easier said than done.)

Digital Identity Theft and Financial Fraud

One of the least visible risks can also be one of the most devastating and long-lasting: child identity theft.

Criminals target children’s unmonitored Social Security numbers and personal data because they are “clean.” Fraud can go undetected for years, until the child tries to rent an apartment, apply for student loans, or get a credit card as a young adult.

The source of compromise is often mundane: large-scale data breaches, parents oversharing personal details online (“sharenting”), or even family members committing fraud. Even if you are perfect in your own digital activities, your child’s picture and name may end up in others’ feeds, on a school website, or in a local news or sports story. We have limited control over all aspects of our digital exhaust.

The FTC recommends freezing a child’s credit file as a preventive measure, yet many parents are unaware this is possible. Most children should not have a credit file at all; for a minor, the bureaus create a record and then freeze it, which makes it much harder for anyone to open new credit in the child’s name. If a credit file already exists, that’s a red flag worth investigating.

This risk is compounded by financial manipulation: highly targeted ads, influencer content, in-game “pay-to-win” mechanics, and loot boxes that exploit developmental vulnerabilities and normalize gambling-like behavior.

What Parents Can Actually Do

- Freeze your child’s credit with all three bureaus (links to their child credit freeze info: Equifax, Experian, TransUnion). You’ll need to provide some personal information for both your child and yourself, but the bureaus already have most of it anyway, at least for adults.

- Limit sharing of personal details and milestones online. This can be challenging; let’s face it, most of us do this now because it’s the easiest way to share with family and friends.

- Lock down in-app purchases and payment methods.

- Teach kids the basics of financial scams early.

Gaming, Apps, and Embedded Cybersecurity Risks

Modern games are not just games; they are social and financial platforms. Most games likely started with founders who thought something might be a really fun idea. Unfortunately, as some of them have grown into part of a multibillion-dollar industry with millions of players, risks have come along, including both financial predators and child predators.

Beyond grooming, kids face phishing links, fake mods, malware, and scams designed to steal credentials or trigger unauthorized purchases. Many teens can get around controls using VPNs or other means, either because they’re more sophisticated than their parents or simply because where there’s a will, there’s a way. That expands the risk further. Again, from MissingKids.org quoting an NCMEC report: “Violent groups are targeting kids on publicly available messaging platforms, including Discord, Roblox and gaming sites. Initially offenders befriend them and initiate an online relationship, then force their young targets to record or live stream acts of harm against themselves and others.”

What Parents Can Actually Do

- Enable two-factor authentication on gaming accounts where possible. (If parental tools like these aren’t available, consider disallowing the app, even if “all my friends play.”) You should own the admin account and create kid accounts wherever possible.

- Block unofficial mods and downloads. This may be challenging. These typically come from links in game chat or Discord, YouTube descriptions (“free skins,” “aimbot,” “FPS boost”), third-party mod sites, fake installers posing as mods, and Google Drive or Dropbox links. They are a top malware and scam vector for kids. Review purchase histories regularly. Check every platform and where its restrictions are. And make sure the kids use non-admin user accounts on their computers. One simple rule? “If a mod requires downloading an installer, it’s a no.”

- Treat chat-based links as hostile by default.

- Check who’s on chat lists. Even if you tell your kid, “only put people on your list you know,” their definition of who they “know” might include someone online they don’t know in real life (IRL). They might have gone on some quest or teamed up with someone in a game, even without access to chat. Then they get invited to some other private server. Once they’re used to playing with someone, they think they “know” that person just because the screen name or player tag has become familiar over time.

- Understand that some games have so-called “private servers” that aren’t really private. Anyone can still invite anyone to them.

- Note that even if you have chat turned off completely, there may still be ways to communicate. Some games allow creation of game assets, including little signs. Well, you can put custom text on those.

- Tell them in no uncertain terms not to share any personal information with anyone. This seems obvious, but some situations are subtle. If something pops up on screen asking for anything (even if it looks like it comes from the system), they should come ask you. Sometimes a service will legitimately check for age appropriateness and require some kind of info, but deciding what to share is your job. They need to have this clarified. Or they may think they’re just chatting with friends they know in real life, when they’re actually on someone else’s private server in a game, or others have snuck in somehow, probably by invitation from another friend. The answer is to never put any such info into any kind of online chat.

I’ve seen the CEOs of several gaming companies interviewed about some of these issues. They all claim to have some controls in place and to be working on more. The reality is likely the same as with everything else: there’s no magic solution. Parental controls remain sloppy, and bad actors will likely always find ways around them. If we want to offer these products to our kids because they have value, it keeps coming back to our own attention to what they’re doing. Knock on the door. Sit and watch some gameplay. Checking browser history isn’t enough. Look for any “hidden worlds” or other venues where they might be interacting (user-generated spaces, mods, side channels, external communities). Parents often don’t realize these exist unless they look.

Virtual Reality (VR) and Augmented Reality (AR)

VR and AR are rapidly becoming new digital playgrounds.

These immersive environments introduce unique risks: exposure to adult content, virtual harassment, biometric data collection (eye tracking, motion data), and physical effects like eye strain or motion sickness in developing visual systems.

Experts recommend strict age limits and close supervision. For better or worse (likely both), there’s not a lot we can do about how others use these devices.

What Parents Can Actually Do

- Enforce age minimums (generally 13+).

- Use child-specific profiles and safety settings.

- Limit sessions to 20–30 minutes.

- Supervise early use, not just setup.

Harmful Viral Challenges and Trends

Not all dangerous content looks dark.

Viral challenges often encourage risky behavior for social validation. Recent trends have caused hospitalizations and deaths, with safety analyses reporting a sharp rise in ER visits since 2023.

Algorithms reward novelty and escalation, not safety.

What Parents Can Actually Do

- Talk openly about peer pressure and reputation incentives.

- Encourage skepticism toward “everyone’s doing it” narratives.

- Discuss real-world consequences early.

- Sometimes just this awareness can help. Most kids aren’t stupid, but they don’t always engage their brains! Sometimes all it takes is enough awareness that they stop for just a second to question something.

Ideological, Hate, and Propaganda Content

Algorithmic systems can rapidly funnel kids into ideological echo chambers (misogynistic, extremist, or racially hateful), especially through gaming communities and video platforms.

Children lack the historical context and critical thinking skills to recognize propaganda when it’s wrapped in memes, humor, or grievance narratives.

What Parents Can Actually Do

- Teach digital literacy as a core life skill.

- Help kids distinguish opinion, manipulation, and evidence.

- Discuss how algorithms amplify outrage.

- Encourage exposure to diverse viewpoints.

Conclusion

The danger is not one app, one platform, or one technology.

It’s the stacking effect: AI, algorithms, peer dynamics, financial systems, immersive environments, and identity risks reinforcing one another, often invisibly.

AI accelerates everything, good and bad. Parenting has always been hard, but the pace, intimacy, and opacity of today’s digital environment are unprecedented.

This isn’t a call for panic. It’s a call for attention.

And real, human attention is still the most powerful safety tool we have. Managing all this is a time-consuming hassle; it’s already challenging to manage our own IDs and passwords, and adding more for kids is yet another level of effort. But whatever protective tools platforms do offer are a layer of protection. Sometimes just a thin layer, but maybe that’s enough to avoid a problem. We do what we can with what we’ve got.

See Also:

- Microsoft Windows Computers Family Safety

- Google / YouTube Family Link – You’ll have to install an app.

- Apple iPhone / iPad: Settings → Screen Time → Turn On Screen Time

- Meta Family Center

- Xbox Family Settings App

- PlayStation Family App

- Nintendo Parental Controls

- TikTok Family Pairing: Profile → ☰ → Settings & Privacy

- It’s a bit more effort, but you can also control what comes into your home right at the DNS level with tools like NextDNS or OpenDNS FamilyShield.

Parental Monitoring Apps