Welcome back. If you’re new here: in Part 1, I went over some basic risk categories Product Managers need to look out for when AI is a component of the offering.

This follow-up covers the items in Part 2 of the outline. My motivation for writing these articles is that I ran head first into the challenges described in Part 1 and didn’t see a lot of great article coverage. The topics in this next part are already well covered elsewhere, but I’m going to run through them as introductions anyway for the sake of completing the outline and having a cohesive package for anyone new to the area.

As with so much we deal with, these areas are evolving fast. Many areas don’t have settled answers yet, and in the case of some fairness and ethical areas, might not even have the right questions yet.

Risk Category Outline for Agentic and AI

Part 1: See First Article

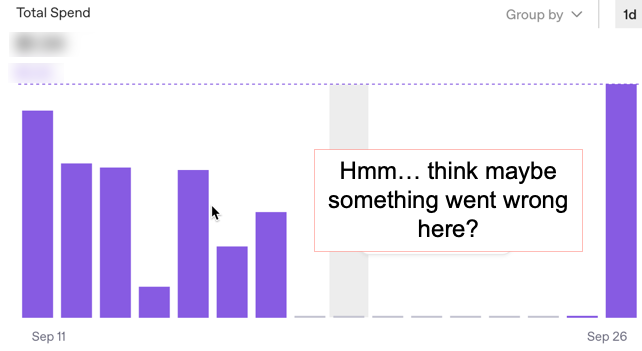

- Financial / Token Usage

- P&L

- Fraud

- Dependencies / Infrastructure Ops

- Workflow

- API

- Scrape / Oracle Endpoints

- Crypto

Part 2: Details on the following sections continue below

- Model and Output Risks
  - Incorrect Answers
  - Dangerous Answers
  - Intentional Jailbreak
  - Bias / Fairness
- Security, Governance, Risk & Compliance (GRC)
  - Security (Beyond Fraud)
  - Privacy / Data Governance
  - Regulatory / Compliance
  - Ethical / Societal Issues

Model Output Risks: Incorrect Answers

Incorrect answers are when your AI outputs wrong info, like a chatbot giving bad advice in a health app. The concern is user harm or misinformation spread, leading to lost credibility, complaints, or lawsuits if decisions based on it go awry. There’s product failure risk if accuracy dips below expectations.

As we’re evolving our experience here, we’re learning Retrieval Augmented Generation (RAG) isn’t necessarily a magic solution.

So what’s the deal with RAG? It’s supposed to help with everything from “truthiness” to precision. But for starters, embeddings could be off, and chunking strategies and more could be wrong. Data Scientist Arthur Mello points out that vector similarity ≠ true relevance. We might need to do more with queries, tuning, chunking strategies, and so on. See: Why Your RAG Isn’t Working and what to do about it. Also, think about the RAG flow… You’re typically running a similarity query against a vector database to return results that are subsequently sent through a larger-scope LLM. This is a filter of sorts. It’s supposed to be better, right? However, it could decrease recall: anything the retrieval step leaves out, the LLM never sees. (Insofar as recall can conceptually be a metric here, given we’re talking more about concepts now than specific documents.)
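To make the “filter of sorts” point concrete, here’s a minimal sketch of a top-k retrieval step and a recall check against human-labelled relevant documents. Everything here is a made-up assumption for illustration: the three-number vectors stand in for real embeddings, and the document names and relevance labels are hypothetical. It only shows how “similar” and “relevant” can diverge.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def top_k(query_vec, doc_vecs, k):
    """Return the ids of the k documents most similar to the query."""
    ranked = sorted(
        doc_vecs,
        key=lambda doc_id: cosine(query_vec, doc_vecs[doc_id]),
        reverse=True,
    )
    return ranked[:k]

def recall_at_k(retrieved, relevant):
    """Share of the truly relevant documents that made it through retrieval."""
    if not relevant:
        return 0.0
    return len(set(retrieved) & set(relevant)) / len(relevant)

# Hypothetical toy data: vectors stand in for real embeddings.
DOC_VECS = {
    "dosage_guide": [0.9, 0.1, 0.0],
    "marketing_page": [0.8, 0.3, 0.1],
    "contraindications": [0.2, 0.9, 0.1],
    "unrelated_faq": [0.0, 0.1, 0.9],
}
QUERY_VEC = [1.0, 0.2, 0.0]
RELEVANT = {"dosage_guide", "contraindications"}  # assumed human labels

for k in (2, 3):
    hits = top_k(QUERY_VEC, DOC_VECS, k)
    print(k, hits, recall_at_k(hits, RELEVANT))
```

In this toy setup the marketing page is closer to the query than the contraindications document, so a tight k drops something a human would call relevant. Widening k recovers it, at the cost of sending more (and noisier) context to the LLM.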

What about sophisticated prompts? Can’t these help? Maybe. Or make things worse. One belief is that getting the best out of AI is similar to working with any assistant. The better your instructions, the better the output. One of LinkedIn’s favorite AI article topics seems to be Prompt Engineering tips and tricks. These might be useful. But for devs who want to go next level, we can now try Microsoft’s Prompt Orchestration Markup Language (POML). Now you can build a more sophisticated, structured prompt. And stuff it into an agent or something. Is this better? Or is it just adding complexity on top of complexity in an attempt to just mock observability and come up with even more baffling points for misunderstanding? Will it be a helpful abstraction layer? Or yet another governance burden?

This is all somewhat like when sports announcers will show a tough replay and say to the audience, “You Make the Call!”

What Can You Do? Consider grounding in verified data sources via Retrieval Augmented Generation (RAG); just be sure to check on the latest best practices for doing so. Maybe integrate confidence scoring to flag uncertain outputs for user review. Establish feedback loops for users to report errors, use that data to iterate on models, and add human oversight in critical paths. Use rubrics for testing, probably using an external firm. Consider different approaches; again, going to an article by Arthur Mello, take a look at “LLMs Predict Words. LCMs Predict Ideas.”
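As a rough idea of what “confidence scoring to flag uncertain outputs” could look like, here’s a minimal sketch. It assumes your model provider can return per-token log-probabilities (many can, but check yours), and it uses the geometric-mean token probability as a stand-in confidence score. The function names and the 0.75 threshold are hypothetical. Note this kind of score measures how sure the model was about its wording, not whether the answer is actually correct.

```python
import math

REVIEW_THRESHOLD = 0.75  # assumed cut-off; tune it against labelled examples

def confidence_from_logprobs(token_logprobs):
    """Geometric-mean token probability, i.e. exp(mean(logprob))."""
    if not token_logprobs:
        return 0.0  # no evidence at all: treat as lowest confidence
    return math.exp(sum(token_logprobs) / len(token_logprobs))

def route(answer, token_logprobs, threshold=REVIEW_THRESHOLD):
    """Decide whether an answer is shown directly or flagged for review."""
    score = confidence_from_logprobs(token_logprobs)
    status = "show_to_user" if score >= threshold else "flag_for_review"
    return {"answer": answer, "confidence": round(score, 3), "status": status}

# Hypothetical log-probabilities for two generated answers.
print(route("Take with food.", [-0.05, -0.10, -0.02]))
print(route("Double the dose.", [-1.2, -0.9, -2.0]))
```

The flagged bucket is where your feedback loop and human reviewers earn their keep. The threshold is a product decision as much as a technical one: set it too low and errors slip through, too high and reviewers drown.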

Model Output Risks: Dangerous Answers

Dangerous answers involve AI suggesting harmful actions, like recipes for toxins or self-harm advice, even unintentionally. The worry is real-world consequences: ethical breaches, legal liability, or public backlash if it enables danger. This could halt your offering entirely.

What Can You Do? Product managers can limit scope to safe domains in the initial design phase and embed content filters with keyword/context checks, while partnering with domain experts for custom, domain-specific safeguards. Develop incident response protocols, including rapid model updates, to ensure quick containment of any harmful outputs, and where appropriate use user education pop-ups to highlight AI limitations. (Just realize that even if you do all this, if someone gets back a sketchy enough response, it’s going viral through the usual venues. Maybe to some lawyers. If bad enough, mainstream news. So consider legal / crisis PR issues as well, no matter how hard you work to avoid this.)
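For a feel of what a “keyword/context check” means at its simplest, here’s a small sketch. The term lists are hypothetical placeholders, and real systems would lean on trained classifiers and domain-expert input rather than a handful of strings. The idea shown is just the two-step logic: a risky term triggers a closer look, and surrounding safety context downgrades a hard block to a human review.

```python
import re

# Hypothetical, deliberately tiny lists; a real filter needs expert curation.
RISKY_TERMS = ("toxin", "poison", "self-harm", "overdose")
SAFE_CONTEXT = ("poison control", "seek help", "emergency services", "crisis line")

def check_output(text):
    """Return (decision, matched_terms) for a candidate model output."""
    lowered = text.lower()
    hits = [
        term for term in RISKY_TERMS
        if re.search(rf"\b{re.escape(term)}\b", lowered)
    ]
    if not hits:
        return "allow", hits
    # Risky term plus safety-oriented context: send to review, don't auto-block.
    if any(phrase in lowered for phrase in SAFE_CONTEXT):
        return "review", hits
    return "block", hits

print(check_output("Here is a pasta recipe."))
print(check_output("If you suspect an overdose, call emergency services immediately."))
print(check_output("Mix these to create a toxin."))
```

Keyword lists like this are easy to evade and prone to false positives, which is why they’re a first layer at best.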

Model Output Risks: Intentional Jailbreak

There are multiple levels of bad we have to contend with. The mostly harmless are those just looking to break their big blog story with inappropriate answers. They want to be YouTube heroes for five seconds. They’ll maybe find some crafty prompt created by someone else and exercise it. This will likely be mostly a PR problem. Which doesn’t mean it might not be serious. Next, we have the other level. The true bad guys. They may be seeking to beat guardrails and use service(s) for bad intent. This is clearly more serious and harder to detect.

Intentional jailbreak means users deliberately tricking AI to bypass safety filters, like prompting it to generate harmful content. Concerns range from PR nightmares (e.g., viral screenshots of offensive outputs) to enabling real crimes if bad actors succeed. This can damage brand reputation or invite regulatory challenges.

What Can You Do? Mitigate jailbreaks by incorporating red-teaming into your testing cycles, simulating attacks to strengthen prompt engineering and system prompts in the product backlog. Implement user monitoring for suspicious patterns with rate limits and assemble a cross-functional response team to deploy fixes, turning transparency into a trust-building feature post-incident. Just… again… realize perfect is unlikely here. Someone intentionally trying to break you will probably find some prompt eventually. There are dark tools out there that also help such people.
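One of the simpler pieces above is the rate limit. Here’s a minimal sliding-window sketch, assuming an in-memory, single-process service; the class name and the numbers are hypothetical. In practice you might apply something like this only to prompts that already tripped a safety filter, so a user hammering away at your guardrails gets slowed down and surfaced to your monitoring.

```python
import time
from collections import defaultdict, deque

class SlidingWindowLimiter:
    """Allow at most max_requests per user within the last window_seconds."""

    def __init__(self, max_requests, window_seconds, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the behaviour can be tested
        self._hits = defaultdict(deque)

    def allow(self, user_id):
        now = self.clock()
        hits = self._hits[user_id]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: reject and, ideally, log for review
        hits.append(now)
        return True

# Example: at most 3 flagged prompts per user per minute (assumed numbers).
limiter = SlidingWindowLimiter(max_requests=3, window_seconds=60)
print([limiter.allow("user-42") for _ in range(4)])
```

A determined attacker will rotate accounts, so treat this as friction and a signal source, not a wall.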

Model Output Risks: Bias / Fairness

Bias in AI refers to unintended prejudices baked into models, often from skewed training data, leading to discriminatory outcomes. (E.g., a hiring tool favoring certain demographics. You’ve probably seen some of the headlines.) Besides basic ethical issues, the concern is reputational damage, lawsuits for discrimination, or regulatory fines, especially in sensitive areas like finance or healthcare. It erodes trust and can alienate markets.

What Can You Do? Require diverse dataset audits in your supplier contracts and integrate fairness metrics into KPI tracking for ongoing model evaluations. You’re trying to ensure equitable performance across user groups. During development, audit datasets for diversity, use debiasing techniques (e.g., re-weighting samples), and include fairness metrics in testing. (Example: Tools like Google’s What-If Tool can help.) Diverse teams for product decisions and regular post-launch audits help prevent surprises. (See: The What-If Tool: Interactive Probing of Machine Learning Models and Decoding AI Decisions: Elevating Transparency and Fairness with Google’s What-If Tool.)
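To show what a “fairness metric” and “re-weighting samples” can mean in practice, here’s a small sketch on made-up data. The metric is the demographic parity difference (the gap in positive-outcome rates between groups), and the weights follow the common reweighing idea of P(group) × P(label) / P(group, label). These are one choice among several fairness definitions, and the group names and labels below are hypothetical.

```python
from collections import Counter

def selection_rates(groups, outcomes):
    """Positive-outcome rate per group (outcomes are 0 or 1)."""
    totals, positives = Counter(), Counter()
    for group, outcome in zip(groups, outcomes):
        totals[group] += 1
        positives[group] += int(outcome == 1)
    return {group: positives[group] / totals[group] for group in totals}

def demographic_parity_difference(groups, outcomes):
    """Gap between the highest and lowest group selection rates."""
    rates = selection_rates(groups, outcomes)
    return max(rates.values()) - min(rates.values())

def reweighing_weights(groups, labels):
    """Per-sample weight = P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * pair_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

# Hypothetical hiring data: group "a" is approved far more often than "b".
GROUPS = ["a"] * 4 + ["b"] * 4
LABELS = [1, 1, 1, 0, 1, 0, 0, 0]

print(selection_rates(GROUPS, LABELS))
print(demographic_parity_difference(GROUPS, LABELS))
print(reweighing_weights(GROUPS, LABELS))
```

The weights boost the under-represented combinations (here, rejected “a” and approved “b” samples) so that a model trained with them sees group and label as statistically independent. A number like the parity gap is also the kind of thing you can put on a KPI dashboard.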

Security / GRC: Security (Beyond Fraud)

There are broader security risks beyond just fraud. These include adversarial inputs (crafted data that fools AI, like stickers on stop signs tricking self-driving cars) and model poisoning during training. Whether this is just mischief or outright terrorism depends on your situation, including the scale, the actor, and their intent, if you can even sort that out. Clear concerns include system failures causing real harm (e.g., safety incidents), IP theft if models are reverse-engineered, or cascading downtime affecting Service Level Agreements (SLAs). Products face eroded reliability KPIs and potential recalls.

What Can You Do? Mitigation involves robust testing: use adversarial training to harden models, implement input validation layers, and monitor for anomalies. Try for zero-trust architectures in your product requirements, and budget for regular penetration testing with varied vendors to uncover vulnerabilities. Doing things like this (penetration tests and such) can perhaps make your overall product better anyway. These are, in my opinion, among the more unfortunate costs to incur. It might not be overly professional to express it this way, but doesn’t it kind of suck pretty bad that the threat surface area has become so great? Again, consider mixing up testing vendors every so often. They’ll all find somewhat different things. Costs can run from thousands to millions depending on scope.

GRC: Privacy / Data Governance

This is how AI handles personal data, from collection to usage, under laws like GDPR or CCPA. Concerns include breaches exposing user info, leading to massive fines (e.g., millions in penalties) or class-action suits, plus loss of competitive edge if users bail due to privacy fears. PMs risk stalled launches if compliance isn’t baked in, or rework costs from retrofitting.

What Can You Do? Use privacy-by-design: anonymize data early, apply differential privacy where appropriate, and conduct impact assessments. Use data catalogs for governance and set data retention limits, partnering with legal to ensure compliance and avoiding rework by baking these into user flows early. If I were to re-do my career, I’d maybe have been a plaintiff’s lawyer with expertise in this area, as it’s going to continue to be a rich hunting ground. It’s a whole cottage industry just waiting for you to screw up in this minefield even just a little.
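If “differential privacy” sounds abstract, here’s a minimal sketch of its best-known building block, the Laplace mechanism, applied to a simple count (say, “how many users asked about topic X”). The function names are hypothetical, and the epsilon value is an assumption you’d settle with your data and legal teams: smaller epsilon means more noise and stronger privacy. Production systems should use a vetted library rather than hand-rolled noise.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(true_count, epsilon, rng=None, sensitivity=1.0):
    """Release a count with Laplace noise of scale sensitivity / epsilon.

    sensitivity=1.0 assumes one person can change the count by at most 1.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    return true_count + laplace_noise(sensitivity / epsilon, rng)

# Hypothetical report: the true count is 100, epsilon is an assumed budget.
rng = random.Random(0)
print(private_count(100, epsilon=0.5, rng=rng))
```

Any single release is off by a bit, which is the point: no one individual’s presence can be confidently inferred from it. Averages over many releases still land near the truth, which is also why repeated queries eat into your privacy budget.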

The reality is you’ll probably have holes all over the place, especially if you’re using third-party models. Startups? Probably don’t care. Or maybe they do, but the more powerful driver will be, “Let’s get to MVP first, then worry about closing any holes. We have nothing right now, so we’re judgment-proof anyway.” This may be sad, but we’ve seen this movie and we see the current attitude of speed over quality in a lot of places. (Or even if not “judgment-proof” per se, they may make the calculation, “We’ll take the risks to get to market because we’d rather die in battle than run out of runway building ‘non-functional’ capabilities.”) As to Large Corporate? Well, there’s a lot more to lose there. The fast-moving start-up crowd often laughs at the slow dinosaurs. But one strategic marketplace claim for larger corporates might be better customer safety, because they have to manage compliance better than most startups anyway.

GRC: Regulatory / Compliance

AI regulations are evolving, with frameworks like the EU AI Act classifying systems into risk levels (unacceptable, high, limited, minimal) and imposing requirements like transparency reports. One worry is non-compliance leading to bans, audits, or market exclusions. (Think about your product getting pulled from Europe overnight, though people are often fond of fining you lots of money first.) For PMs, this could delay roadmaps or force pivots, inflating costs.

What Can You Do? Mitigate by staying ahead: map your AI to regulatory categories early (e.g., via NIST’s AI Risk Management Framework (RMF)), build in audit trails for explainability, and partner with legal teams for ongoing monitoring. Use voluntary ISO standards for AI (e.g., ISO/IEC 42001) to future-proof, and design modular features that can adapt to new rules without full rebuilds. Remember, what the EU thinks is legitimate enforcement is seen by others as a great way to just tax international firms. Even though EU tech chiefs say they don’t target US tech, others say Europe’s GDPR Fines Against US Firms Are Unfair and Disproportionate. Bottom Line? Leaving aside ethical issues or customer issues, you could get… what’s the technical term? Oh yes, completely walloped over the head in both the U.S. and elsewhere. Whatever the political arguments may be, enjoy reading the 20 biggest GDPR fines so far [2025]. For big firms, it’s a significant liability. For small ones? Potential company killers.
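On the “build in audit trails” point, here’s one minimal way to think about it: an append-only log where each entry includes a hash of the previous one, so after-the-fact edits are detectable. This is a sketch under assumptions, not a compliance recipe; the field names and events are hypothetical, and what a regulator actually expects you to record is a question for your legal team.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(body):
    """Stable SHA-256 over a JSON-serialisable dict."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def append_entry(log, event):
    """Append an event, chaining it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    body = {"index": len(log), "event": event, "prev_hash": prev_hash}
    log.append({**body, "hash": _digest(body)})
    return log

def verify(log):
    """Return True only if no entry was altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for position, entry in enumerate(log):
        body = {
            "index": entry["index"],
            "event": entry["event"],
            "prev_hash": entry["prev_hash"],
        }
        if (
            entry["index"] != position
            or entry["prev_hash"] != prev_hash
            or entry["hash"] != _digest(body)
        ):
            return False
        prev_hash = entry["hash"]
    return True

# Hypothetical events: which model version made which decision, and why.
audit_log = []
append_entry(audit_log, {"model": "v1.2", "decision": "approve", "reason": "score 0.91"})
append_entry(audit_log, {"model": "v1.2", "decision": "deny", "reason": "score 0.34"})
print(verify(audit_log))
```

The useful part for explainability is less the hashing and more the discipline: every AI-driven decision gets a record of which model, what inputs or scores, and what outcome, in a form you can hand to an auditor.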

GRC: Ethical / Societal Issues

You can kind of mostly ignore this, right? I mean, really. You have a product to put out. It’s almost pointless for me to include this here as a) it’s being talked about everywhere and is an obvious concern, b) it’s a book-length, (at least), issue, and c) no one has really solved any of these big issues yet anyway. Even finding some of the right questions doesn’t seem all that clear.

So just for completeness and in case you’re new to this, here’s a quick summary: Higher-level ethics in this realm encompasses broader harms. (Think job displacement from automation or AI amplifying misinformation on a societal scale.) The concern is backlash, from boycotts and negative PR to ethical dilemmas stalling innovation. PMs might see talent flight if a company ignores this, or long-term market shifts. You can mitigate with ethical frameworks: conduct impact assessments pre-launch, involve ethicists in product decisions, and transparently communicate limitations. Perhaps you can add features like user controls for AI outputs, and align with corporate social responsibility goals to turn ethics into a differentiator, boosting brand loyalty.

My perspective on this right now is somewhat dark. (And no, I’m not talking about some Terminator scenario here.) I’m generally an optimist, but in this space (getting towards the end of 2025), the reality is a lot of folks building product here still don’t even know just how some of this tech works. And even in the best cases, there are challenges in mitigating some issues. Meanwhile, there’s pressure to check off the “We’re Using AI Somewhere!” box. There’s a lot of talk about ethics, but again, the rush to “win” seems to beat taking time to consider concerns first. In fairness, it’s incredibly hard to anticipate some outcomes, and sometimes the only way to adjust things is after you have experiential data. The scope of this particular issue is often far beyond what a lot of us are building. Still, it’s worth keeping in mind. For a big picture view of some of this, see “The Age of AI: And Our Human Future” by Kissinger, Schmidt and Huttenlocher.

Repeating the closing ideas from Part 1 now…

Wrapping Up

Navigating the risks of agentic AI and workflows is in a lot of ways going to be more challenging than traditional risk management. There are a lot of so-called “known unknowns” and likely some pure “unknowns.” The challenge is generally in being a lot more proactive. You need a blend of vigilance, technical safeguards, and strategic planning. Mostly, we’re really talking about mitigations, like cost controls, layered security, and ethical guardrails. However, no system is foolproof, especially with general-purpose tools where gaps persist. We’ll need a mindset of continuous improvement, backed by monitoring, testing, and crisis readiness, to ensure our products not only deliver value but also maintain trust and safety in a fast-evolving marketplace that will likely also have larger threat surface areas.

See Also: